## Voice Activity Detector (VAD)

A voice activity detector (VAD) can be very useful when dealing with interactions between humans and virtual characters. For this reason, VSM comes with an extension that detects when voice activity is present. When it is used with a noise-isolating microphone, detected activity can be taken to mean that a human is speaking. The extension implements the algorithm defined by M. H. Moattar and M. M. Homayounpour.

In this tutorial, you will learn how to make use of this extension.

It is important to mention that this extension is not an instance of **ActivityExecutor** but an instance of **RunTimePlugin**. This means that we do not have access to it via the Project Editor, so we have to set up the project by hand.

1. Create a new project in VSM. You can name it whatever you like; for this tutorial we named it *VAD*.

2. Save the project in your filesystem.

3. In your file browser, go to the location where you just saved your project and open the **project.xml** file with your favourite text editor.

4. Go to the **Plugins** section and add the following: `<Plugin type="device" name="VAD" class="de.dfki.vsm.xtension.voicerecognition.VoiceActivityDetectorExecutor" load="true"><Feature key="variable" val="speaking"/></Plugin>`

Here we indicate the extension that we are going to use (VoiceActivityDetectorExecutor) and that we want it loaded when the project is opened. In the *Feature* tag we give the name of the variable in which we will later see the results.

5. Save the project.

You should see something like this:

```
<?xml version="1.0" encoding="UTF-8"?>
<Project name="VAD">
  <Plugins>
    <Plugin type="device" name="VAD" class="de.dfki.vsm.xtension.voicerecognition.VoiceActivityDetectorExecutor" load="true">
      <Feature key="variable" val="speaking"/>
    </Plugin>
  </Plugins>
  <Agents>
  </Agents>
  <Player>
  </Player>
</Project>
```
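If you want to double-check the hand-edited file before opening it in VSM, a small standalone program can confirm that the XML is well-formed and that the *Feature* tag carries the variable name you expect. The following is only a sketch using the standard Java XML parser; the class `VadConfigCheck` and its method `findVariableName` are hypothetical helpers written for this tutorial and are not part of VSM.

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;

// Hypothetical helper (not part of VSM): inspects a project.xml string.
class VadConfigCheck {

    /**
     * Returns the val attribute of the Feature with key="variable" inside the
     * Plugin whose name attribute equals pluginName, or null if none is found.
     */
    static String findVariableName(String projectXml, String pluginName) {
        try {
            Document doc = DocumentBuilderFactory.newInstance()
                    .newDocumentBuilder()
                    .parse(new ByteArrayInputStream(
                            projectXml.getBytes(StandardCharsets.UTF_8)));
            NodeList plugins = doc.getElementsByTagName("Plugin");
            for (int i = 0; i < plugins.getLength(); i++) {
                Element plugin = (Element) plugins.item(i);
                if (!pluginName.equals(plugin.getAttribute("name"))) {
                    continue; // some other plugin, skip it
                }
                NodeList features = plugin.getElementsByTagName("Feature");
                for (int j = 0; j < features.getLength(); j++) {
                    Element feature = (Element) features.item(j);
                    if ("variable".equals(feature.getAttribute("key"))) {
                        return feature.getAttribute("val");
                    }
                }
            }
            return null;
        } catch (Exception e) {
            // Malformed XML ends up here, which is itself a useful check.
            throw new IllegalStateException("Could not parse project.xml", e);
        }
    }

    public static void main(String[] args) {
        String xml = "<?xml version=\"1.0\" encoding=\"UTF-8\"?>"
                + "<Project name=\"VAD\"><Plugins>"
                + "<Plugin type=\"device\" name=\"VAD\" "
                + "class=\"de.dfki.vsm.xtension.voicerecognition.VoiceActivityDetectorExecutor\" "
                + "load=\"true\">"
                + "<Feature key=\"variable\" val=\"speaking\"/>"
                + "</Plugin></Plugins><Agents></Agents><Player></Player></Project>";
        System.out.println(findVariableName(xml, "VAD")); // prints "speaking"
    }
}
```

The name printed here is the one that the boolean variable in your VSM model has to match.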

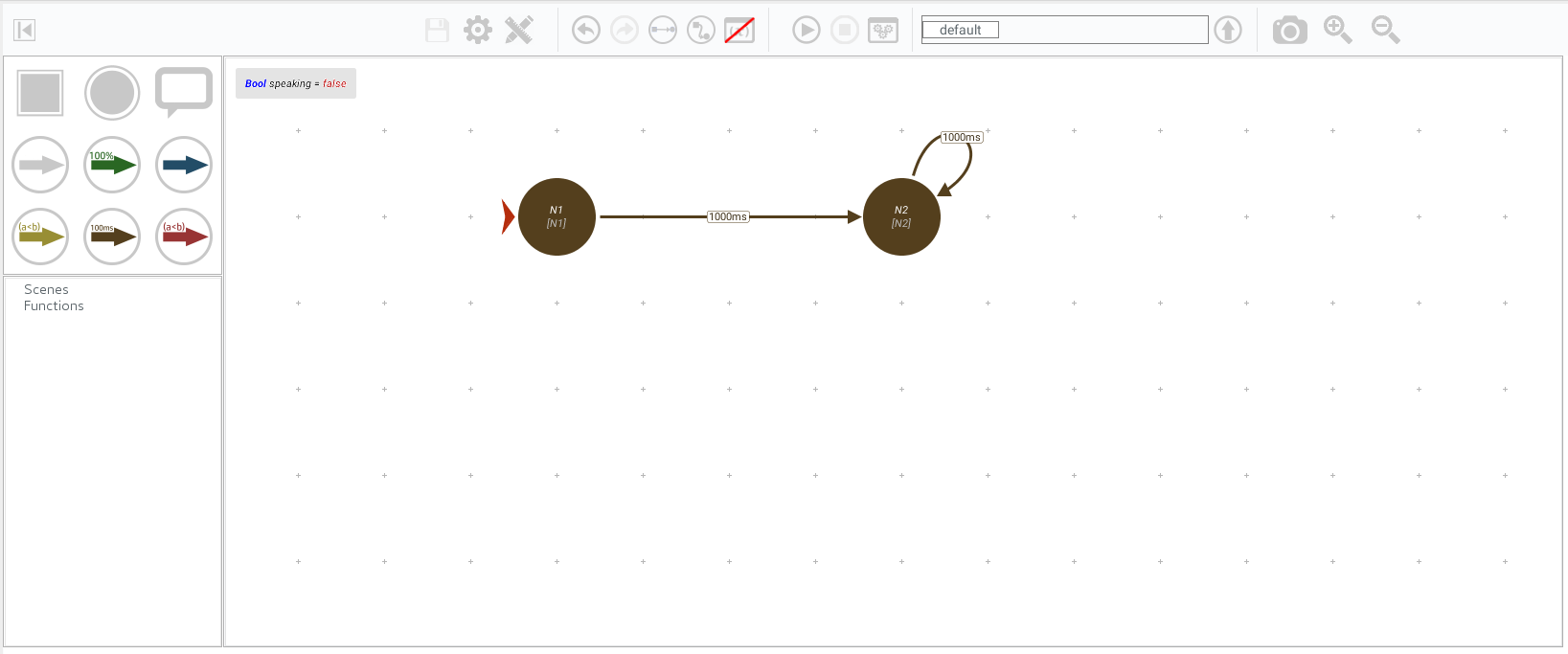

Now it is time to create the model in VSM. To do so, create two nodes and connect them with a *timeout edge*. More importantly, add a self-loop on the last node; this keeps the instance of our model running. Afterwards, and this is very important, create a new boolean variable with the name that you specified previously in the project.xml file and set its default value to **false** (after all, we are not speaking when we start the app).

Hit the play button and start talking! You will see the variable that you defined in the project file change to **true** while you speak and to **false** in moments of silence.

Note:

Writing to a variable whether or not someone is speaking is the default behavior of this extension.

If you want a different behavior every time the user speaks, you can extend the functionality by implementing the **VoiceNotifiable** interface and adding your own code.
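As a rough illustration of what such custom behavior could look like, the sketch below counts how many times the user starts speaking. Note that the `VoiceNotifiable` interface declared here is only a stand-in so that the example compiles on its own: the callback name `onVoiceActivity` and its boolean parameter are assumptions, and the real interface in the VSM sources may declare a different method. `UtteranceCounter` is likewise a hypothetical class.

```java
// Stand-in for VSM's VoiceNotifiable interface. ASSUMPTION: the real
// interface may use a different method name and signature.
interface VoiceNotifiable {
    void onVoiceActivity(boolean speaking); // hypothetical callback
}

// Hypothetical listener: counts transitions from silence to speech.
class UtteranceCounter implements VoiceNotifiable {
    private boolean wasSpeaking = false;
    private int utterances = 0;

    @Override
    public void onVoiceActivity(boolean speaking) {
        // React only on the rising edge, i.e. when speech has just started.
        if (speaking && !wasSpeaking) {
            utterances++;
        }
        wasSpeaking = speaking;
    }

    int getUtterances() {
        return utterances;
    }
}

class UtteranceCounterDemo {
    public static void main(String[] args) {
        UtteranceCounter counter = new UtteranceCounter();
        // Simulated detector output: silence, speech, speech, silence, speech.
        boolean[] frames = {false, true, true, false, true};
        for (boolean frame : frames) {
            counter.onVoiceActivity(frame);
        }
        System.out.println(counter.getUtterances()); // prints 2
    }
}
```

How a listener like this is registered with the extension is not covered in this tutorial; check the extension's source code for the actual interface and wiring.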
